Bootstrapping Vinum: A Foundation for Reliable Servers

Robert A. Van Valzah

$Id: article.sgml,v 1.11 2003/02/01 17:34:02 keramida Exp $

Copyright © 2001 by Robert A. Van Valzah

$Date: 2003/02/01 17:34:02 $ GMT

In the most abstract sense, these instructions show how to build a pair of disk drives where either one is adequate to keep your server running if the other fails. Life is better if they are both working, but your server will never die unless both disk drives die at once. If you choose ATAPI drives and use a fairly generic kernel, you can be confident that either of these drives can be plugged into most any main board to produce a working server in a pinch. The drives need not be identical. These techniques work as well with SCSI drives as they do with ATAPI drives, but I will focus on ATAPI here because main boards with this interface are ubiquitous. After building the foundation of a reliable server as shown here, you can expand to as many disk drives as necessary to build the failure-resilient server of your dreams.

1. Introduction

Any machine that is going to provide reliable service needs to have either redundant components on-line or a pool of off-line spares that can be promptly swapped in. Commodity PC hardware makes it affordable for even small organizations to have some spare parts available that could be pressed into service following the failure of production equipment. In many organizations, a failed power supply, NIC, memory, or main board could easily be swapped with a standby in a matter of minutes and be ready to return to production work.

If a disk drive fails, however, it often has to be restored from a tape backup. This may take many hours. With disk drive capacities rising faster than tape drive capacities, the time needed to restore a failed disk drive seems to increase as technology progresses.

Vinum is a volume manager for FreeBSD that provides a standard block I/O layer interface to the filesystem code just as any hardware device driver would. It works by managing partitions of type vinum and allows you to subdivide and group the space in such partitions into logical devices called volumes that can be used in the same way as disk partitions. Volumes can be configured for resilience, performance, or both. Experienced system administrators will immediately recognize the benefits of being able to configure each filesystem to match the way it is most often used.

In some ways, Vinum is similar to ccd(4), but it is far more flexible and robust in the face of failures. It is only slightly more difficult to set up than ccd(4). ccd(4) may meet your needs if you are only interested in concatenation.

1.1. Terminology

Discussion of storage management can get very tricky simply because of the terminology involved. As we will see below, the terms disk, slice, partition, subdisk, and volume each refer to different things that present the same interface to a kernel function like swapping. The potential for confusion is compounded because the objects that these terms represent can be nested inside each other.

I will refer to a physical disk drive as a spindle. A partition here means a BSD partition as maintained by disklabel. It does not refer to slices or BIOS partitions as maintained by fdisk.

1.2. Vinum Objects

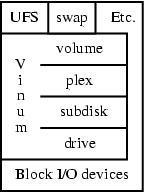

Vinum defines a hierarchy of four objects that it uses to manage storage (see Figure 1). Different combinations of these objects are used to achieve failure resilience, performance, and/or extra capacity. I will give a whirlwind tour of the objects here--see the Vinum web site for a more thorough description.

The top object, a vinum volume, implements a virtual disk that provides a standard block I/O layer interface to other parts of the kernel. The bottom object, a vinum drive, uses this same interface to request I/O from physical devices below it.

In between these two (from top to bottom) we have objects called a vinum plex and a vinum subdisk. As you can probably guess from the name, a vinum subdisk is a contiguous subset of the space available on a vinum drive. It lets you subdivide a vinum drive in much the same way that a BSD partition lets you subdivide a BIOS slice.

A plex allows subdisks to be grouped together making the space of all subdisks available as a single object.

A plex can be organized with its constituent subdisks concatenated or striped. Both organizations are useful for spreading I/O requests across spindles since the subdisks of a plex can reside on distinct spindles. A striped plex will switch spindles each time a multiple of the stripe size is reached. A concatenated plex will switch spindles only when the end of a subdisk is reached.
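The difference between the two organizations can be sketched as an address calculation. The following Python is only an illustrative model, not Vinum's actual code: the function names, the use of abstract block offsets, and the assumption that all subdisks of a striped plex are the same size are mine.

```python
from bisect import bisect_right
from itertools import accumulate


def concat_locate(offset, subdisk_sizes):
    """Map a plex offset to (subdisk index, offset in that subdisk)
    for a concatenated plex. Hypothetical helper for illustration."""
    ends = list(accumulate(subdisk_sizes))  # running end offset of each subdisk
    if not 0 <= offset < ends[-1]:
        raise ValueError("offset outside plex")
    index = bisect_right(ends, offset)      # first subdisk whose end is past offset
    start = ends[index] - subdisk_sizes[index]
    return index, offset - start


def striped_locate(offset, subdisk_count, stripe_size):
    """Same mapping for a striped plex, assuming equal-sized subdisks."""
    stripe_number, within = divmod(offset, stripe_size)
    row, index = divmod(stripe_number, subdisk_count)
    return index, row * stripe_size + within
```

In this model, consecutive stripes of a striped plex alternate between subdisks, while a concatenated plex stays on the first subdisk until it is full.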

An important characteristic of a Vinum volume is that it can be made up of more than one plex. In this case, writes go to all plexes and a read may be satisfied by any plex. Configuring two or more plexes on distinct spindles yields a volume that is resilient to failure.
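As a rough mental model of this behavior, consider the toy Python classes below. They are hypothetical and greatly simplified: real Vinum also tracks plex state and must revive a plex that has been down before it can be trusted again, which this sketch ignores.

```python
class Plex:
    """Toy stand-in for a plex: a dictionary of block number -> data."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}
        self.up = True  # set to False to simulate a failed spindle


class MirroredVolume:
    """Toy volume with several plexes (assumed to sit on distinct spindles)."""

    def __init__(self, plexes):
        self.plexes = list(plexes)

    def write(self, block, data):
        live = [p for p in self.plexes if p.up]
        if not live:
            raise OSError("no plex available")
        for plex in live:          # a write goes to every working plex
            plex.blocks[block] = data

    def read(self, block):
        for plex in self.plexes:   # a read may be satisfied by any working plex
            if plex.up:
                return plex.blocks[block]
        raise OSError("no plex available")
```

With two plexes, marking one as down after a write still leaves the data readable from the other, which is the resilience property described above.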

Vinum maintains a configuration that defines instances of the above objects and the way they are related to each other. This configuration is automatically written to all spindles under Vinum management whenever it changes.

1.3. Vinum Volume/Plex Organization

Although Vinum can manage any number of spindles, I will only cover scenarios with two spindles here for simplicity. Table 1 shows how two spindles organized with Vinum compare to two spindles without Vinum.

Table 1. Characteristics of Two Spindles Organized with Vinum

| Organization | Total Capacity | Failure Resilient | Peak Read Performance | Peak Write Performance |
|---|---|---|---|---|
| Concatenated Plexes | Unchanged, but appears as a single drive | No | Unchanged | Unchanged |
| Striped Plexes (RAID-0) | Unchanged, but appears as a single drive | No | 2x | 2x |
| Mirrored Volumes (RAID-1) | 1/2, appearing as a single drive | Yes | 2x | Unchanged |

Table 1 shows that striping yields the same capacity and lack of failure resilience as concatenation, but it has better peak read and write performance. Hence we will not be using concatenation in any of the examples here. Mirrored volumes provide the benefits of improved peak read performance and failure resilience--but this comes at a loss in capacity.

Note: Both concatenation and striping bring their benefits over a single spindle at the cost of increased likelihood of failure since more than one spindle is now involved.
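The trade-off in this note can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that each spindle fails independently with the same probability `p` over some period. Real drives sharing a power supply or controller are not fully independent, so treat the numbers as rough guidance only.

```python
def loss_probability(p, spindles, organization):
    """Probability that a volume loses data, under the independence
    assumption stated above. Hypothetical helper, not part of Vinum."""
    if organization in ("concat", "stripe"):
        # Data is lost if ANY spindle fails.
        return 1 - (1 - p) ** spindles
    if organization == "mirror":
        # Data is lost only if ALL spindles (one full copy on each) fail.
        return p ** spindles
    raise ValueError(f"unknown organization: {organization}")
```

For example, with `p = 0.1` and two spindles, a striped or concatenated volume loses data with probability of about 0.19, while a mirrored volume does so with probability of about 0.01.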

When three or more spindles are present, Vinum also supports rotated, block-interleaved parity (also called RAID-5) that provides better capacity than mirroring (but not quite as good as striping), better read performance than both mirroring and striping, and good failure resilience. There is, however, a substantial decrease in write performance with RAID-5. Most of the benefits become more pronounced with five or more spindles.
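The capacity comparison can be sketched the same way. The function below is an illustrative assumption of mine, not something Vinum provides. It assumes equal-sized spindles, a mirror made of exactly two plexes that split the spindles between them, and RAID-5 parity consuming the equivalent of one spindle.

```python
def usable_capacity(organization, spindles, size):
    """Usable capacity for `spindles` drives of `size` each.
    Hypothetical helper for illustration only."""
    if organization in ("concat", "stripe"):
        return spindles * size            # all space is usable
    if organization == "mirror":
        return spindles * size / 2        # two copies of everything
    if organization == "raid5":
        if spindles < 3:
            raise ValueError("RAID-5 needs three or more spindles")
        return (spindles - 1) * size      # one spindle's worth holds parity
    raise ValueError(f"unknown organization: {organization}")
```

With five spindles of 100 units each, this gives 500 for striping, 400 for RAID-5, and 250 for mirroring, which matches the ordering described above.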

The organizations described above may be combined to provide benefits that no single organization can match. For example, mirroring and striping can be combined to provide failure-resilience with very fast read performance.

1.4. Vinum History

Vinum is a standard part of even a "minimum" FreeBSD distribution and it has been standard since 3.0-RELEASE. The official pronunciation of the name is VEE-noom.

Vinum was inspired by the Veritas Volume Manager, but was not derived from it. The name is a play on that history and the Latin adage In Vino Veritas (Vino is the ablative form of Vinum). Literally translated, that is "Truth lies in wine," hinting that drunkards have a hard time lying.

I have been using it in production on six different servers for over two years with no data loss. Like the rest of FreeBSD, Vinum provides "rock-stable performance." (On a personal note, I have seen Vinum panic when I misconfigured something, but I have never had any trouble in normal operation.) Greg Lehey wrote Vinum for FreeBSD, but he is seeking help in porting it to NetBSD and OpenBSD.

Warning: Just like the rest of FreeBSD, Vinum is undergoing continuous development. Several subtle but significant bugs have been fixed in recent releases. It is always best to use the most recent code base that meets your stability requirements.

1.5. Vinum Deployment Strategy

Vinum, coupled with prudent partition management, lets you keep "warm-spare" spindles on-line so that failures are transparent to users. Failed spindles can be replaced during regular maintenance periods or whenever it is convenient. When all spindles are working, the server benefits from increased performance and capacity.

Having redundant copies of your home directory does not help you if the spindle holding root, /usr, or swap fails on your server. Hence I focus here on building a simple foundation for a failure-resilient server covering the root, /usr, /home, and swap partitions.

Warning: Vinum mirroring does not remove the need for making backups! Mirroring cannot help you recover from site disasters or the dreaded rm -r -f / command.

1.6. Why Bootstrap Vinum?

It is possible to add Vinum to a server configuration after it is already in production use, but this is much harder than designing for it from the start. Ironically, Vinum is not supported by /stand/sysinstall and hence you cannot install /usr right onto a Vinum volume.

Note: Vinum currently does not support the root filesystem (this feature is in development).

Hence it is a bit tricky to get started using Vinum, but these instructions take you through the process of planning for Vinum, installing FreeBSD without it, and then beginning to use it.

I have come to call this whole process "bootstrapping Vinum": that is, the process of getting Vinum initially installed and operating to the point where you have met your resilience or performance goals. My purpose here is to document a Vinum bootstrapping method that I have found works well for me.

1.7. Vinum Benefits

The server foundation scenario I have chosen here allows me to show you examples of configuring for resilience on /usr and /home. Yet Vinum provides benefits other than resilience--namely performance, capacity, and manageability. It can significantly improve disk performance (especially under multi-user loads). Vinum can easily concatenate many smaller disks to produce the illusion of a single larger disk (but my server foundation scenario does not allow me to illustrate these benefits here).

For servers with many spindles, Vinum provides substantial benefits in volume management, particularly when coupled with hot-pluggable hardware. Data can be moved from spindle to spindle while the system is running without loss of production time. Again, details of this will not be given here, but once you get your feet wet with Vinum, other documentation will help you do things like this. See "The Vinum Volume Manager" for a technical introduction to Vinum, vinum(8) for a description of the vinum command, and vinum(4) for a description of the vinum device driver and the way Vinum objects are named.

Note: Breaking up your disk space into smaller and smaller partitions has the benefit of allowing you to "tune" for the most common type of access and tends to keep disk hogs "within their pens." However, it also causes some loss in total available disk space due to fragmentation.

1.8. Server Operation in Degraded Mode

Some disk failures in this two-spindle scenario will result in Vinum automatically routing all disk I/O to the remaining good spindle. Others will require brief manual intervention on the console to configure the server for degraded mode operation and a quick reboot. Other than actual hardware repairs, most recovery work can be done while the server is running in multi-user degraded mode so there is as little production impact from failures as possible.

In Section 4, I give the instructions needed to configure the server for degraded mode operation in those cases where Vinum cannot do it automatically. I also give the instructions needed to return to normal operation once the failed hardware is repaired. You might call these instructions Vinum failure recovery techniques.

I recommend practicing using these instructions by recovering from simulated failures. For each failure scenario, I also give tips below for simulating a failure even when your hardware is working well. Even a minimum Vinum system as described in Section 1.10 below can be a good place to experiment with recovery techniques without impacting production equipment.

1.9. Hardware RAID vs. Vinum (Software RAID)

Manual intervention is sometimes required to configure a server for degraded mode because Vinum is implemented in software that runs after the FreeBSD kernel is loaded. One disadvantage of such software RAID solutions is that there is nothing that can be done to hide spindle failures from the BIOS or the FreeBSD boot sequence. Hence the manual reconfiguration of the server for degraded operation mentioned above just informs the BIOS and boot sequence of failed spindles. Hardware RAID solutions generally have an advantage in that they require no such reconfiguration since spindle failures are hidden from the BIOS and boot sequence.

Hardware RAID, however, may have some disadvantages that can be significant in some cases:

- The hardware RAID controller itself may become a single point of failure for the system.

- The data is usually kept in a proprietary format so that a disk drive cannot be simply plugged into another main board and booted.

- You often cannot mix and match drives with different sizes and interfaces.

- You are often limited to the number of drives supported by the hardware RAID controller (often only four or eight).

Tip: Keep your kernel fairly generic (or at least keep /kernel.GENERIC around). This will improve the chances that you can come back up on "foreign" hardware more quickly.

The pros and cons discussed above suggest that the root filesystem and swap partition are good candidates for hardware RAID if available. This is especially true for servers where it is difficult for administrators to get console access (recall that this is sometimes required to configure a server for degraded mode operation). A server with only software RAID is well suited to office and home environments where an administrator can be close at hand.

Note: A common myth is that hardware RAID is always faster than software RAID. Since it runs on the host CPU, Vinum often has more CPU power and memory available than a dedicated RAID controller would have. If performance is a prime concern, it is best to benchmark your application running on your CPU with your spindles using both hardware and software RAID systems before making a decision.

1.10. Hardware for Vinum

These instructions may be timely since commodity PC hardware can now easily host several hundred gigabytes of reasonably high-performance disk space at a low price. Many disk drive manufacturers now sell 7,200 RPM disk drives with quite low seek times and high transfer rates through ATA-100 interfaces, all at very attractive prices. Four such drives, attached to a suitable main board and configured with Vinum and prudent partitioning, yield a failure-resilient, high-performance disk server at a very reasonable cost.

However, you can indeed get started with Vinum very simply. A minimum system can be as simple as an old CPU (even a 486 is fine) and a pair of drives that are 500 MB or more. They need not be the same size or even use the same interface (i.e., it is fine to mix ATAPI and SCSI). So get busy and give this a try today! You will have the foundation of a failure-resilient server running in an hour or so!